Technology and development approach of Advanced Conversational AI

Just as the automotive industry is redefining itself through electric vehicles, conversational AI also requires a change. New platforms, processes and tools based on generative AI are essential to further develop this technology and exploit its full potential.

Generative AI can transform customer service in the long term.

However, there are two fundamental challenges:

- Currently available conversational AI tools support only simple applications with Large Language Models (LLMs); they neither use LLM capabilities efficiently nor are they cost-optimized for production use.

- In dialogue applications based purely on generative AI, the decisions made at runtime are no longer traceable, and hallucinations put production use at risk. At the same time, the new EU AI Act sets out a framework intended to ensure the safe and transparent use of AI systems.

With over 20 years of experience in developing highly complex conversational AI solutions, we have created a new generation of conversational AI platform. SemanticEdge uses LLMs to take full advantage of the conversational intelligence and development speed they bring to conversational AI solutions. At the same time, we minimize or eliminate the potential disadvantages of LLMs to ensure optimal results.

Do you have any questions?

Please contact us with your thoughts by e-mail at info@semanticedge.de or by phone at +49 30 3450770.

Differentiation of SemanticEdge – Our Generative AI Technology and Benefits

Generative AI approach

- Task-optimized LLM usage

- Extensive knowledge bases for fine-tuning

Advantages:

- Minimization of hallucination risks and costs

- Transparent AI decisions

- Optimization of response speed and security

Dialogue development

- Auto-generated dialogues

- Configurable dialogue templates

Advantage:

Significantly faster development of dialogue modules and industry solutions

Time-to-Market

- Configurable, ready-to-deploy, fine-tuned industry solutions and dialogue modules

Advantage:

Go live in weeks rather than months, with solutions backed by years of fine-tuning

Dialogue Quality/Intelligence

- Dynamic, highly personalized dialogues

Advantages:

- Higher customer satisfaction

- Higher levels of automation

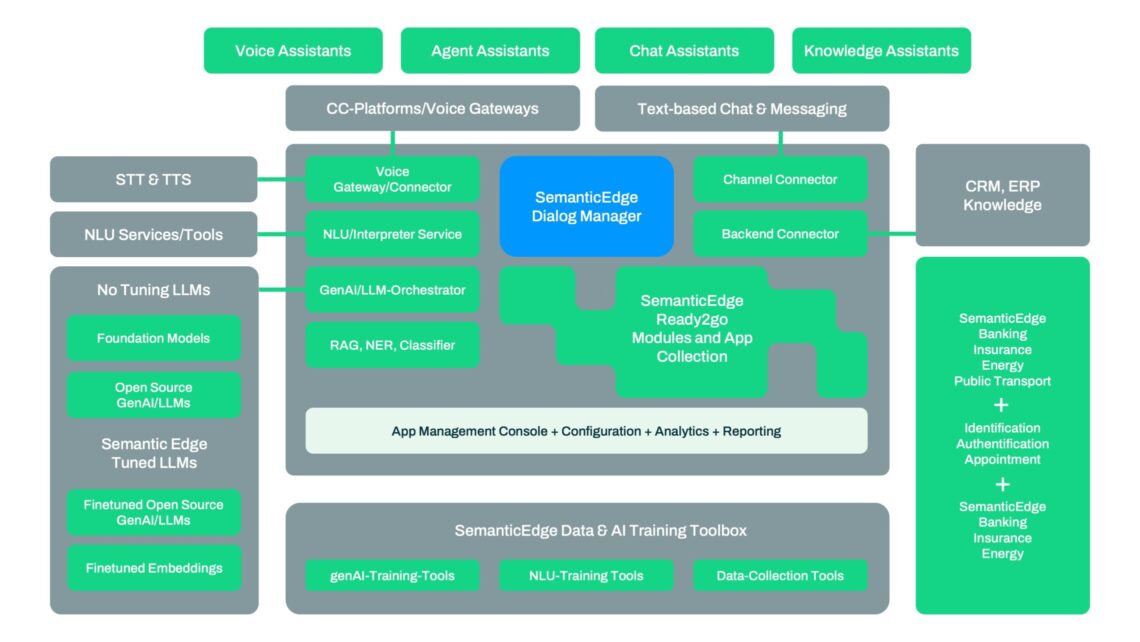

SemanticEdge architecture: AI assistant with generative AI

Ready-to-use AI assistants and knowledge bases:

A wide range of industry-specific dialogue modules and knowledge bases, together with many industry-independent dialogue modules tuned in production, enable a fast go-live and high levels of automation.

Automatic dialogue generation:

While dialogue development in other providers' development environments is still based on rigid flow diagrams, the SemanticEdge Conversational AI platform can also generate dialogues automatically using generative AI. This is faster and more cost-effective, and it can significantly increase the expressiveness of the dialogues.

Task-oriented LLM orchestration:

The LLM Orchestrator component integrates different LLMs (closed-source models such as GPT or Gemini, but also open-source models) into an application in a task-oriented manner. It can also use on-premises LLMs that have been fine-tuned with the knowledge bases. This reduces LLM costs, guarantees full transparency of AI decisions, and minimizes hallucination risks.
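
To make the idea of task-oriented orchestration more concrete, the following is a minimal sketch and not the SemanticEdge implementation. It assumes that each dialogue task type is mapped to one model backend and that every routing decision is written to an audit log. All names (`Orchestrator`, `Route`, the task labels and the stub models) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# All names here are hypothetical; they only illustrate the routing idea.
ModelFn = Callable[[str], str]


@dataclass
class Route:
    model_name: str  # label of the backend, e.g. a hosted or an on-prem model
    call: ModelFn    # function that sends the prompt to that backend


@dataclass
class Orchestrator:
    routes: Dict[str, Route]
    default_task: str
    audit_log: List[dict] = field(default_factory=list)

    def run(self, task: str, prompt: str) -> str:
        # Unknown task types fall back to the default route.
        chosen = task if task in self.routes else self.default_task
        route = self.routes[chosen]
        answer = route.call(prompt)
        # Record which model handled which task so the decision can be traced.
        self.audit_log.append(
            {"task": task, "routed_as": chosen, "model": route.model_name}
        )
        return answer


# Stub backends standing in for real model clients (assumption).
def small_on_prem_model(prompt: str) -> str:
    return f"[on-prem] {prompt}"


def large_hosted_model(prompt: str) -> str:
    return f"[hosted] {prompt}"


orchestrator = Orchestrator(
    routes={
        "intent_classification": Route("on-prem-small", small_on_prem_model),
        "free_form_answer": Route("hosted-large", large_hosted_model),
    },
    default_task="free_form_answer",
)

reply = orchestrator.run("intent_classification", "I want to change my address")
```

In this sketch, a narrow task such as intent classification goes to a smaller model while open-ended answers go to a larger one, which is one way costs could be kept down, and the audit log keeps a record of each routing decision.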

GenAI tool pipeline for preparing the data:

For creating and fine-tuning the respective industry data pools or generative AI models, the SemanticEdge Conversational AI platform offers appropriate tools and pipelines, e.g. to prepare large volumes of customer statements and make them available to an AI system, or to compare LLM performance. The quality of the data and the fine-tuning options are crucial to the success of the solution.

Extensive configuration at runtime:

The multi-tenant AI assistants are highly configurable via the Management Console. Customer service and the specialist departments can make far-reaching application changes at runtime, controlled by roles and rights and without dialogue design experience, and gain detailed insights into performance. The Management Console also uses generative AI to provide deep insights into the course of each dialogue in order to ensure the desired security and transparency.

Omnichannel Integration:

The Conversational AI platform is integrated into the existing omnichannel contact center (CC) infrastructure, any existing NLUs, and the front ends and back ends via the Telephony/CC Platform, the Channel, the NLU/Interpreter Service and the Backend Connector.

Versatile AI assistants:

The AI assistants can be used in various use cases: primarily as voice assistants in the central hotlines, as chat assistants in the various digital channels, as knowledge assistants for natural language searches across various document formats, or as agent assistants that support CC agents during calls or chats.